In a landmark move to combat fraudulent robocall scams, the Federal Communications Commission (FCC) has declared AI-generated voices in automated calls illegal under U.S. telemarketing laws.

This decision comes in response to a surge in deceptive robocall campaigns exploiting AI technology to defraud unsuspecting recipients.

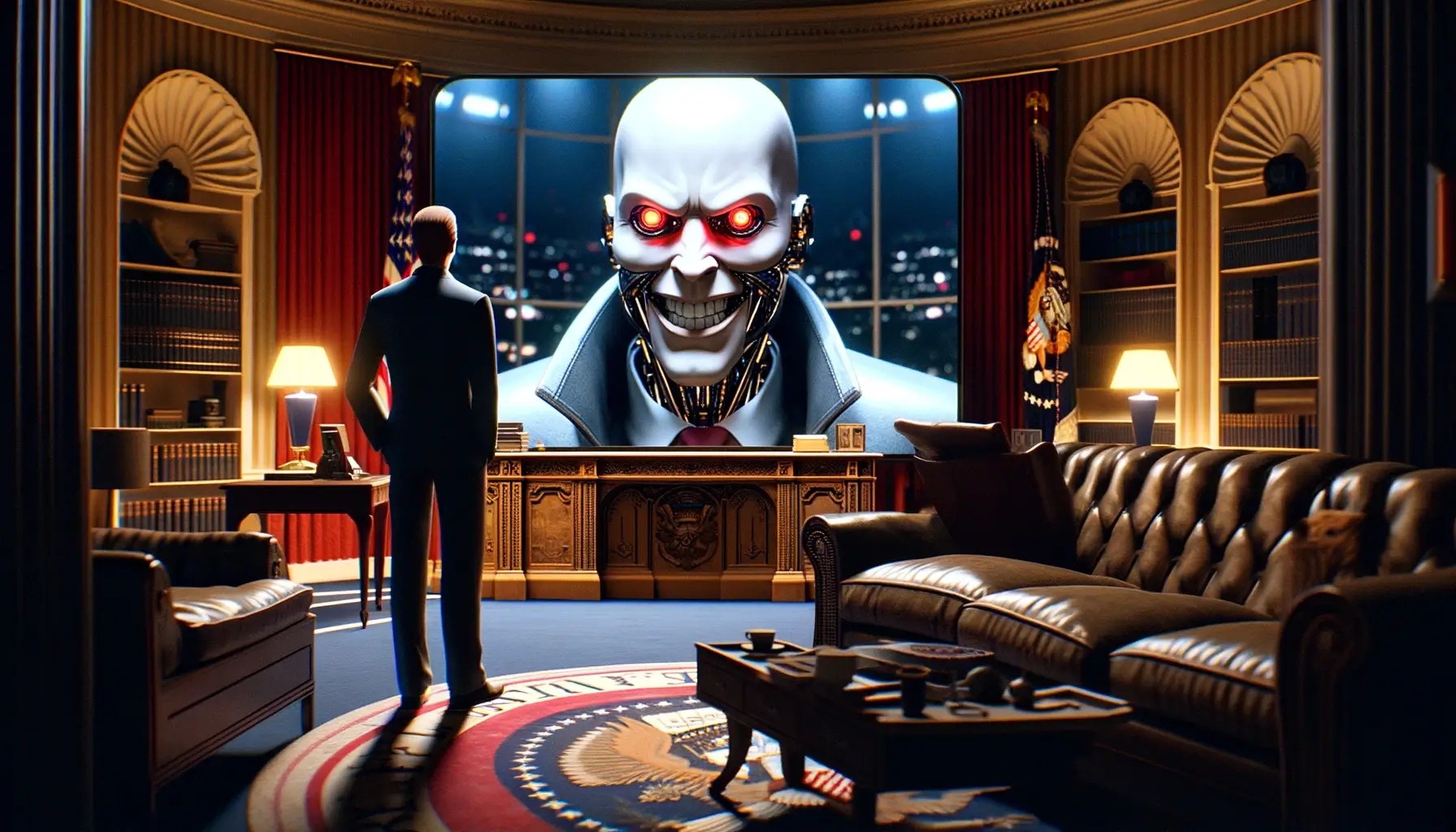

The FCC’s prohibition follows a recent incident in which residents of New Hampshire received fabricated voice messages impersonating U.S. President Joe Biden and urging them not to vote in the state’s primary election.

This brazen misuse of AI technology underscores the urgency of regulatory measures to curb malicious robocall activities.

I stand with 50 attorneys general in pushing back against a company that allegedly used AI to impersonate the President in scam robocalls ahead of the New Hampshire primary. Deceptive practices such as this have no place in our democracy. https://t.co/CqucNaEQGn pic.twitter.com/ql4FQzutdl

— AZ Attorney General Kris Mayes (@AZAGMayes) February 8, 2024

Strengthening Consumer Protections: Implications of the FCC Ruling

Under the Telephone Consumer Protection Act (TCPA), which governs telemarketing practices in the U.S., robocall scams were already prohibited.

However, the FCC’s ruling extends this prohibition to “voice cloning technology” used in AI-generated robocalls, closing a regulatory loophole exploited by scammers.

“This would give State Attorneys General across the country new tools to go after bad actors behind these nefarious robocalls,” the FCC said.

We're proud to join in this effort to protect consumers from AI-generated robocalls with a cease-and-desist letter sent to the Texas-based company in question. https://t.co/qFtpf7eR2X https://t.co/ki2hVhH9Fv

— The FCC (@FCC) February 7, 2024

FCC Chair’s Stance: Combating Robocall Fraud

FCC Chair Jessica Rosenworcel emphasized the agency’s commitment to safeguarding consumers from predatory robocall practices.

By outlawing AI-generated voices in robocalls, the FCC aims to disrupt fraudulent schemes that exploit vulnerable individuals, impersonate public figures, and disseminate false information.

“Bad actors are using AI-generated voices in unsolicited robocalls to extort vulnerable family members, imitate celebrities, and misinform voters. We’re putting the fraudsters behind these robocalls on notice,” said FCC Chair Jessica Rosenworcel.

Addressing Technological Challenges: Enforcement and Compliance

While the FCC’s ruling represents a significant step in combating robocall fraud, challenges remain in enforcing compliance and prosecuting offenders.

The proliferation of AI-powered voice technology presents ongoing regulatory hurdles that call for collaboration among law enforcement agencies, telecommunications providers, and technology companies.

Legal Ramifications: Enforcement Actions Against Scammers

The FCC’s ruling empowers state authorities to take legal action against perpetrators of AI-generated robocall scams, providing a formidable deterrent against illicit telemarketing practices.

Law enforcement agencies can now pursue charges against individuals and entities involved in orchestrating fraudulent robocall campaigns, holding them accountable for their deceptive actions.

Safeguarding Consumers in the Digital Age

As technology evolves, regulatory frameworks must adapt to address emerging threats to consumer safety and privacy.

The FCC’s ban on AI-generated voice robocalls signals a proactive approach to combating fraud and deception in telecommunications, and it underscores the importance of robust regulatory oversight in safeguarding the public from predatory practices.

Moving forward, continued vigilance and collaboration will be essential in preserving the integrity of communication networks and protecting consumers from exploitation.